For the STOR608 course here at STOR-i we covered several research areas as part of the topic sprints, as discussed in my first blog post: “My First Term as a STOR-i MRes Student”. My favorite of these was the sprint led by David Leslie titled Statistical Learning for Decision. For this I focused my research on the use of Thompson Sampling to tackle the multi-armed bandit problem. This will be the topic of this blog post.

Multi-armed bandits provide a framework for solving sequential decision problems in which an agent learns information regarding some uncertain factor as time progresses to make more informed choices. A common application for this is in slot machines, where a player must select one of K arms to pull.

A K-armed bandit is set up as follows: the player has K arms to choose from (known as actions), each of which yields a reward payout defined by random variables. These rewards are independently and identically distributed (IID) with an unknown mean that the player learns through experimentation.

In order to experiment, the player requires a policy regarding which action to take next. Formally a policy is an algorithm that chooses the next arm to play using information on previous plays and the rewards they obtained (Auer et al., 2002).

When deciding which arm to pull next, the policy must weigh up the benefits of exploration against exploitation. Exploitation refers to choosing an arm that is already known to yield a desirable reward in short-term play. Exploration, by contrast, is the search for higher payouts from other arms, which can be used to optimize long-term reward.

In order to quantify a policy’s success, a common measure known as regret is used. The regret of a policy over \(T\) rounds is defined as the difference between the expected reward if the optimal arm is played in all \(T\) rounds and the sum of the rewards observed over the \(T\) rounds.
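As a sketch in symbols (the notation here is my own assumption rather than taken from a particular reference): write \(\mu_k\) for the unknown mean reward of arm \(k\), \(\mu^* = \max_k \mu_k\) for the mean of the optimal arm, and \(r_t\) for the reward observed in round \(t\). The definition above then reads

\[
R_T = T\mu^* - \sum_{t=1}^{T} r_t .
\]

A policy that always played the optimal arm would have a regret of zero on average, so a smaller \(R_T\) indicates a better policy.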

Thompson sampling (TS) is one of the policies used for tackling multi-armed bandit problems. For simplicity we will consider a case where the rewards are binary, i.e. they take the values 0 (for failure) or 1 (for success); however, TS can be extended to rewards with any general distribution.

Consider a multi-armed bandit with \(K\) arms, indexed by \(k\in\{1,\ldots,K\}\), each with an unknown probability of a successful reward \(\theta_k\). Our goal is to maximize the number of successes over a set time period.

We begin by defining a prior belief on our success probabilities which we set to be Beta distributed. Each time we gain an observation we update this prior belief by updating the parameters of the beta distribution and use this updated distribution as a prior for the next arm pull.
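To make the update concrete, here is a minimal sketch of the standard Beta-Bernoulli update for a single arm: a success adds one to the first parameter and a failure adds one to the second. The function name `update_beta` and the example reward sequence are hypothetical, chosen only for illustration.

```python
def update_beta(alpha, beta, reward):
    """Return the Beta parameters after observing one binary reward.

    Assumes a Beta(alpha, beta) prior and a Bernoulli reward (0 or 1).
    """
    if reward not in (0, 1):
        raise ValueError("reward must be 0 (failure) or 1 (success)")
    # A success increments alpha; a failure increments beta.
    return alpha + reward, beta + (1 - reward)


# Start from a Beta(1, 1) prior and observe an illustrative reward sequence.
alpha, beta = 1, 1
for reward in [1, 0, 1, 1]:
    alpha, beta = update_beta(alpha, beta, reward)

print(alpha, beta)             # 4 2
print(alpha / (alpha + beta))  # posterior mean of the success probability
```

After three successes and one failure the belief has moved from Beta(1, 1) to Beta(4, 2), and this updated distribution acts as the prior for the next pull.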

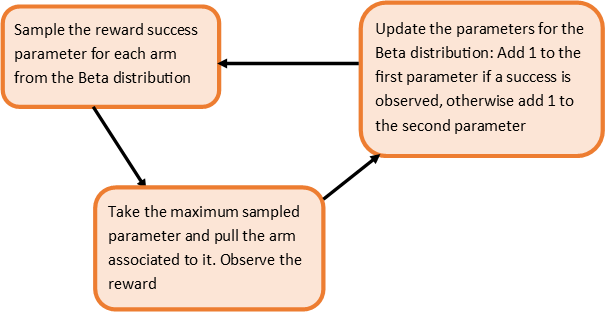

The process for deciding which arm to pull at each time step using the Thompson Sampling algorithm is given in the following diagram:

Although here we have specified a Beta distribution, a distribution of any kind can be used. However, in this case it is a convenient choice as it is conjugate to the Bernoulli distribution, and hence the posterior is also Beta distributed. This is why we only need to update the parameters at each time step. If the chosen prior were not conjugate, the update would not be so simple: the posterior would generally not belong to the same family as the prior, so we could not just adjust two parameters at each step.
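The whole procedure for binary rewards can be sketched in a few lines of Python. This is an illustrative sketch rather than a reference implementation: the function name `thompson_sampling`, the Beta(1, 1) starting priors, the seed, and the success probabilities in the usage lines are all assumptions made for the example. At each round we draw one sample from every arm's current Beta distribution, pull the arm with the largest sample, and update only that arm's parameters.

```python
import random


def thompson_sampling(true_probs, n_rounds, seed=0):
    """Simulate Thompson Sampling on a Bernoulli bandit.

    true_probs holds the success probabilities, which the policy never
    sees directly; they are only used to simulate the rewards.
    """
    rng = random.Random(seed)
    n_arms = len(true_probs)
    alpha = [1] * n_arms  # Beta(1, 1) prior on every arm (an assumption)
    beta = [1] * n_arms
    total_reward = 0

    for _ in range(n_rounds):
        # Draw one plausible success probability per arm from its current belief.
        samples = [rng.betavariate(alpha[k], beta[k]) for k in range(n_arms)]
        # Pull the arm whose sampled probability is largest.
        arm = max(range(n_arms), key=lambda k: samples[k])
        # Simulate the binary reward from the chosen arm.
        reward = 1 if rng.random() < true_probs[arm] else 0
        # Conjugate update: only the pulled arm's parameters change.
        alpha[arm] += reward
        beta[arm] += 1 - reward
        total_reward += reward

    return alpha, beta, total_reward


# Hypothetical three-armed bandit.
alpha, beta, total_reward = thompson_sampling([0.3, 0.5, 0.7], n_rounds=1000)
pulls = [a + b - 2 for a, b in zip(alpha, beta)]
print("pulls per arm:", pulls)
print("total reward:", total_reward)
```

Because the sampled values are random, an arm with few observations still has a wide distribution and will occasionally produce the largest sample, which is how exploration arises without any extra tuning parameter.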

The prior chosen plays a large role in the effectiveness of the Thompson Sampling algorithm, and thus care must be taken over its specification. Ideally it should be chosen to describe all plausible values of the reward success parameter.

In the slot machine case, the player often has no intuition about the values of this success parameter. This is usually described using an uninformative Beta(1,1) prior, which is uniform over the entire [0,1] range. This promotes exploration to learn which arm is optimal.

In the opposite case where we have some knowledge of the true values of the success parameter, we may center the priors about these values in order to reduce regret. Here, we require less exploration to identify the optimal arm and so can exploit this to maximize reward.

Although TS tends to be efficient in minimizing regret, there are occasionally outlier cases where the regret is much higher than expected. This may happen if we begin by pulling the sub-optimal arm and receive a successful reward. We are likely to exploit this further and continue to pull this arm, falsely leading us to believe this arm is actually optimal. Or alternatively, we may begin by selecting the optimal arm and observe a failure reward. We then may exploit the sub-optimal arm under the false belief that it will give us a higher reward.

To conclude, Thompson Sampling is a simple but efficient algorithm for solving stochastic multi-armed bandit problems, successfully balancing exploitation and exploration to maximize reward and minimize regret.

References & Further Reading

Auer, P., Cesa-Bianchi, N., and Fischer, P. (2002). Finite-time analysis of the multiarmed bandit problem. Machine Learning, 47(2):235-256.

Russo, D., Van Roy, B., Kazerouni, A., Osband, I., and Wen, Z. (2017). A tutorial on Thompson Sampling.