The week before last, we organised a two-day workshop, ‘Exploring Artificial Intelligence for Humanities Research’, hosted by the Lancaster University Digital Humanities Hub in collaboration with tagtog, and funded by the ESRC IAA Business Boost.

Tagtog is an online Artificial Intelligence platform that uses Natural Language Processing and Machine Learning to automate the annotation of documents. The idea for this workshop stemmed from a collaboration between the TAP-ESRC project ‘Digging into Early Colonial Mexico’ (DECM) and tagtog, in which tagtog is being used to help annotate and extract information from historical documents. These documents are predominantly in sixteenth-century Spanish but also include indigenous languages such as Nahuatl, Mixtec, and variants of Mayan. Natural Language Processing and Machine Learning are continually evolving fields, and humanities research that employs tools from these disciplines presents new and interesting challenges.

The workshop brought together experts from numerous fields across the humanities and computer sciences. Its aim was to address the questions and problems we encounter in Digital Humanities research and to explore how we might resolve them through collaborative working.

Our first day featured a variety of case study presentations by humanities researchers at Lancaster University:

Patricia Murrieta Flores

Towards the identification, extraction and analysis of information from 16th century colonial sources

In this talk, Patricia explored the ways in which we are identifying, extracting, and analysing information in the Digging into Early Colonial Mexico project. This project is creating and developing novel computational approaches for the semi-automated exploration of thousands of pages of sixteenth-century colonial sources. These sources, known as the Relaciones Geográficas de la Nueva España, are a series of geographic reports which contain a great variety of information about local areas across New Spain, and this project will enable new ways of accessing and analysing the data within. You can read more about how this project has been using tagtog for corpus annotation here.

Clare Egan

Using the Records of Early Modern Libel for Spatial Analysis

Clare gave us an introduction to the world of medieval and early modern defamation, with a focus on verse libels. These libels contain a great deal of information, including many spatial references that could be identified automatically using computational methods. The records of libel are not yet digitised. However, work is underway to photograph and transcribe the handwritten sources. Transcription will convert this handwritten material into machine-readable text, which will allow computational analysis to begin. Extracting data from these sources would enable new analyses of the rich information they contain, as well as new ways of representing it spatially.

Anna Mackenzie

TagTogging Time Lords: using AI and computational methods in developing the first annotated Doctor Who corpus

In her talk, Anna demonstrated how she has begun annotating episode scripts of Doctor Who, with the aim of developing the first annotated Doctor Who corpus. As a science-fiction corpus, these scripts feature references to unique locations, items, species, and concepts, some of which exist only in the Doctor Who universe. The annotation and subsequent analysis of these scripts therefore pose unique challenges for computational text analysis. With more than 750 episodes’ worth of material, computational analysis of this expansive corpus could offer new insights into how various themes and concepts have been portrayed during the seven decades over which the series has been running.

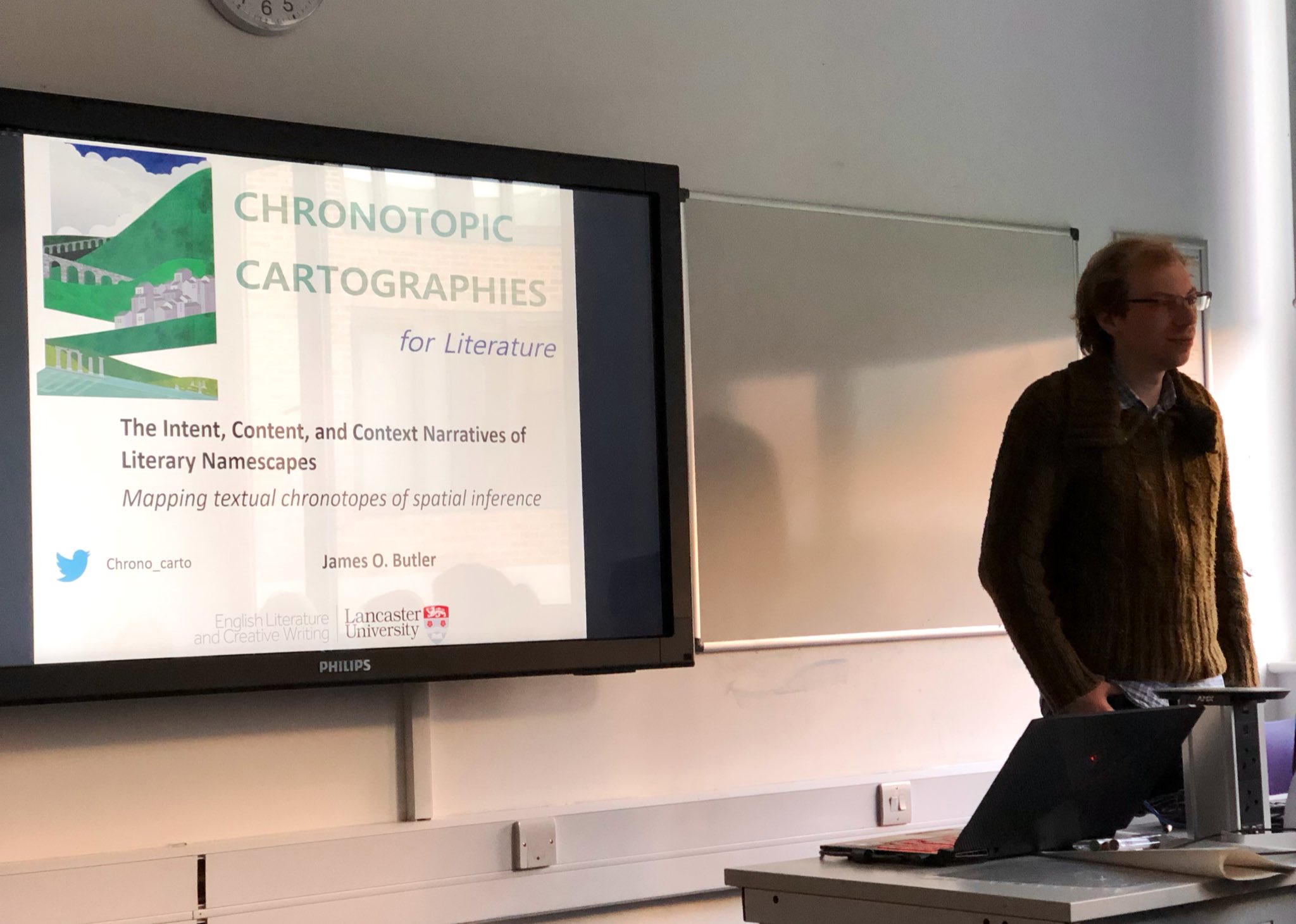

James Butler

The Intent, Content, and Context Narratives of Literary Namescapes: Mapping spatial inference

James’ talk introduced us to the ways in which the Lancaster University research project Chronotopic Cartographies is investigating how digital tools can be used to analyse, map, and visualise the spaces of literary texts. References to fictional spaces that cannot be geographically located pose interesting challenges for computational text analysis, and James and the Chronotopic Cartographies team are exploring ways of tackling this problem. James is also working on refining name roles in order to better contextualise how names are used within fiction. This work will enable more complex understandings and analyses of these texts.

Raquel Liceras Garrido

Archaeological Reports: The case of Numantia

Raquel presented the potential of computational text analysis for extracting information from historic archaeological reports, focusing on the case of Numantia, a site of great archaeological significance in north-central Spain. A series of excavations between 1906 and 1923 produced reports containing crucial spatial, stratigraphic, and material information. Automatically extracting the information in these reports would enable new explorations of the site’s spatial distributions, stratigraphy, and materials.

Deborah Sutton

Mapping the Eighteenth-century Carnatic through Digitised Texts

In this talk, Deborah introduced us to cartographies of the eighteenth-century Carnatic (southern India). She also discussed some contemporaneous English texts produced in relation to military campaigns, alliances, and conquests. These texts make spatial references both to topography and to the value of lands seized through conquest. Computational analysis would allow these texts to be mapped and related to living landscapes. It would also allow the relationship between English texts and Indian nomenclatures to be explored.

James Taylor

Money talks: the language of finance in the nineteenth-century press

James presented the case of analysing financial columns in the nineteenth-century press, exploring the great variety of information that could be extracted from these texts. Although these newspapers have been digitised, the first challenge in extracting the relevant data will be to automatically isolate the sections of text that contain the financial columns. Once extracted, these texts could offer new insights into how financial information was presented in nineteenth-century financial columns. They could also show how the columns referred to broader news and themes.

Ian Gregory

Geographical Text Analysis

In this final talk, Ian explained the processes used for computational text analysis of a corpus of Lake District writing. This work was carried out during Spatial Humanities: Texts, GIS & Places, a five-year project at Lancaster University that ran from 2012 to 2016. The corpus consisted of 80 texts published between 1622 and 1900, amounting to 1.5 million words. The texts were annotated using an XML schema, and place names were extracted and geoparsed. This produced a Geographic Information System (GIS) that could then be used to visualise aspects of the data contained within the texts. One example is the rate at which the word ‘beautiful’ co-occurs with the identified place names. This approach enabled a great deal of information to be extracted and analysed, although there is still progress to be made with these computational methods.
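To give a feel for what a co-occurrence rate involves, here is a minimal Python sketch. It is not the Spatial Humanities project’s pipeline. It assumes that place names have already been identified (the project extracted and geoparsed them from XML-annotated text) and treats each name as a single lowercase token. It also uses a hypothetical window of a few words on either side. Here, “rate” is taken to mean the share of a place’s mentions that have the term nearby, which is one of several reasonable definitions. The function names and the toy sentence are illustrative only.

```python
import re
from collections import Counter


def tokenise(text: str) -> list[str]:
    # Lowercased alphabetic tokens: a deliberately simple stand-in for real tokenisation
    return re.findall(r"[a-z]+", text.lower())


def cooccurrence_rate(tokens: list[str], places: set[str], term: str,
                      window: int = 5) -> dict[str, float]:
    """For each place name, return the share of its mentions that have `term`
    within `window` tokens on either side.

    Assumption (hypothetical simplification): place names are already
    identified and are single lowercase tokens.
    """
    mentions: Counter = Counter()   # how often each place is mentioned
    with_term: Counter = Counter()  # how many of those mentions have the term nearby
    for i, tok in enumerate(tokens):
        if tok in places:
            mentions[tok] += 1
            # Context window on both sides, excluding the place-name token itself
            context = tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]
            if term in context:
                with_term[tok] += 1
    return {place: with_term[place] / mentions[place] for place in mentions}


# A made-up toy sentence, not data from the Lake District corpus
text = "Windermere is beautiful and calm. Keswick lies north of Grasmere. Windermere was grey."
rates = cooccurrence_rate(tokenise(text), {"windermere", "keswick", "grasmere"},
                          "beautiful", window=2)
print(rates)  # {'windermere': 0.5, 'keswick': 0.0, 'grasmere': 0.0}
```

A real corpus would need multi-word place names, handling of spelling variants, and the disambiguation that geoparsing provides. The window size also changes the results considerably, so it is worth reporting alongside any rate.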

The second day was hosted by Juan Miguel Cejuela and Jorge Campos of tagtog, with a presentation exploring Machine Learning and Natural Language Processing. The slides from this presentation can be viewed here. This was followed by a hands-on session which introduced participants to using the tagtog platform for automated annotation of documents, exploring the ways in which this approach could aid humanities research.

If you’re interested in using tagtog, but you’re not sure where to start, they have some quick tutorials on their website which give just a few examples of the ways in which their tool can be used to computationally analyse and extract data from textual information.

Find out more about tagtog: tagtog.net | Twitter | Medium

These two days were a fantastic opportunity to get together with researchers in the humanities and computer sciences, exploring the different ways in which we can work together. We heard about some fascinating Digital Humanities projects and learned a great deal from Juan Miguel and Jorge at tagtog about how Machine Learning and Natural Language Processing work, as well as how best to utilise their wonderful annotation platform at tagtog.net.

We hope to put together some more opportunities for workshops like this – keep an eye out for Lancaster University Digital Humanities Hub updates: Twitter | Website