Our core construction is a latent Gaussian process (GP) backbone, used to represent unobserved quantities across an entire spatiotemporal domain. The GP is the standard tool for emulating computer simulation code and a standard latent construct in spatial statistics; we will extend both frameworks and coalesce them into a single inference framework spanning process models through to heterogeneous, sparsely observed, and possibly extreme, spatiotemporal data.

Building on state-of-the-art spatiotemporal modelling, we will develop more effective models for spatial data; incorporate seasonality, anisotropy, and model misspecification into the GP framework, extending existing semi-parametric bivariate methods; and adapt inference techniques to handle observation process mechanisms (preferential/irregular sampling).
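As an illustration of how seasonality and anisotropy can enter a GP covariance, the following is a minimal sketch and not the model to be developed. It assumes a separable space-time covariance formed as the product of an exponential spatial kernel with geometric anisotropy and a periodic temporal kernel; the function names, parameter names, and default values are all hypothetical.

```python
import numpy as np

def anisotropic_distance(s1, s2, angle=0.0, ratio=1.0):
    """Distance under geometric anisotropy (assumed form): rotate the
    coordinates by `angle`, then shrink the second rotated axis by `ratio`,
    so correlation decays more slowly along that axis when ratio > 1."""
    c, s = np.cos(angle), np.sin(angle)
    rot = np.array([[c, s], [-s, c]])
    scale = np.diag([1.0, 1.0 / ratio])
    A = scale @ rot
    d = (s1[:, None, :] - s2[None, :, :]) @ A.T   # shape (n, m, 2)
    return np.sqrt((d ** 2).sum(axis=-1))

def spatial_kernel(s1, s2, lengthscale=1.0, angle=0.0, ratio=1.0):
    """Exponential correlation on the anisotropic distance."""
    h = anisotropic_distance(s1, s2, angle, ratio)
    return np.exp(-h / lengthscale)

def seasonal_kernel(t1, t2, period=1.0, lengthscale=1.0):
    """Periodic correlation: times one full period apart are perfectly correlated."""
    dt = t1[:, None] - t2[None, :]
    return np.exp(-2.0 * np.sin(np.pi * dt / period) ** 2 / lengthscale ** 2)

def spacetime_kernel(s1, t1, s2, t2, variance=1.0, **kw):
    """Separable product covariance (an assumption made for simplicity)."""
    ks = spatial_kernel(s1, s2,
                        lengthscale=kw.get("space_lengthscale", 1.0),
                        angle=kw.get("angle", 0.0),
                        ratio=kw.get("ratio", 1.0))
    kt = seasonal_kernel(t1, t2,
                         period=kw.get("period", 1.0),
                         lengthscale=kw.get("time_lengthscale", 1.0))
    return variance * ks * kt

# Usage: covariance matrix over 20 random space-time points (synthetic inputs).
rng = np.random.default_rng(0)
sites = rng.uniform(size=(20, 2))
times = rng.uniform(0.0, 3.0, size=20)
K = spacetime_kernel(sites, times, sites, times,
                     variance=2.0, angle=np.pi / 6, ratio=3.0, period=1.0)
```

The product of two valid covariance functions is itself valid, which is why the separable form is a convenient starting point; non-separable structure would require a different construction.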

In emulation we will primarily exploit existing approaches; we will emulate processes described as systems of stochastic differential equations, potentially including missing data; we will also compare our data-driven spatiotemporal models with process models and use discrepancies to improve strategies for sampling design.

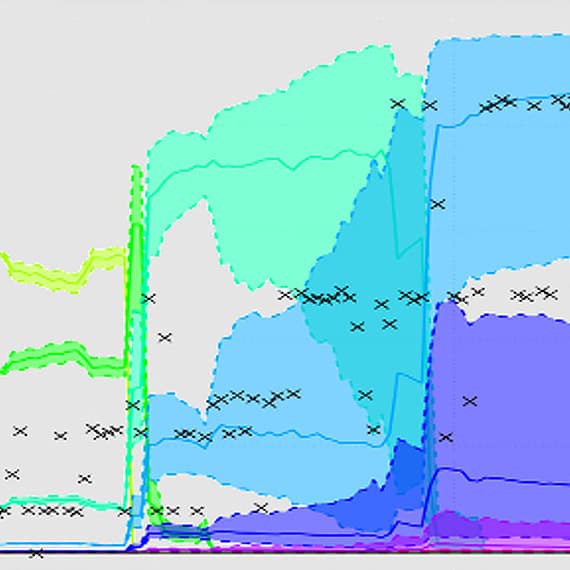

Downscaling is central to relating process models to data. We will build on significant recent advances in multi-layered statistical downscaling to provide a stochastic integration engine that allows multi-scale data fusion and analysis, accounting, in a principled way, for measurement and sampling processes embedded in space-time data and process models.

Environmental data exhibit spatiotemporal extreme value structure. Current models for such data exhibit a strong form of dependence, termed asymptotic dependence, characterised by all locations exhibiting simultaneous extreme values. These models are liable to give poor estimates (biased and with under-estimated variance) of the risk associated with environmental extremes. We will develop a novel framework to escape this problem, combining the strategies of max-stable processes with the conditional extremes perspective. GP model inference will be key here, with the inference problems arising because the process is censored at a threshold below which the asymptotic arguments are not expected to hold.
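For reference, asymptotic dependence between two sites is commonly summarised by the tail dependence coefficient. The notation below is introduced here for illustration and is assumed rather than taken from the text: $Y_1$ and $Y_2$ denote the process at two locations, with marginal distribution functions $F_1$ and $F_2$.

$$
\chi \;=\; \lim_{u \to 1^{-}} \Pr\bigl\{ F_2(Y_2) > u \,\bigm|\, F_1(Y_1) > u \bigr\}
$$

The pair is asymptotically dependent when $\chi > 0$ and asymptotically independent when $\chi = 0$. Max-stable processes give $\chi > 0$ for every pair of sites unless the two sites are exactly independent, whereas a Gaussian process with pairwise correlation below one gives $\chi = 0$.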

The techniques described above are important in their own right, but the step change in capability for the environmental sciences will come from combining these building blocks to draw inference from multiple disconnected information sources. We will develop a statistical framework for integrating scientific knowledge, combining appropriate deterministic, stochastic and data models, to enable full propagation of uncertainty through the different modular elements of an analysis. Initially we will develop core skills for this approach through a series of methodological case studies focused on the challenge themes.