Methodological Themes

Integrated Statistical Modelling

There are major weaknesses in current methods for integrating process knowledge with statistical science. Impressive scientifically informed models in the environmental sciences are frequently let down by a naive approach to inference, leading to poor predictive performance and an inability to assess uncertainty in estimates and predictions properly. Similarly, strong statistical modelling is typically undermined by ignoring known physical processes.

This theme will develop a suite of inference and prediction techniques for environmental problems, bringing together existing technology and new developments in a modular framework.

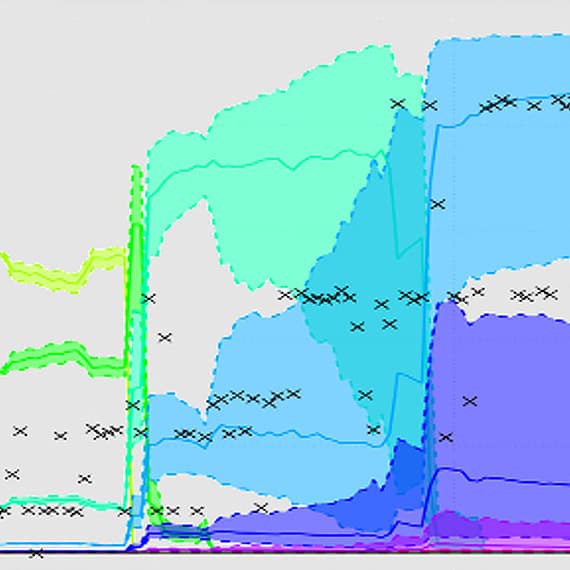

Our core construction is a latent Gaussian process (GP) backbone, used to represent unobserved quantities across an entire spatiotemporal domain. The GP is the standard tool for emulating computer simulation code and a standard latent construct in spatial statistics; we will extend both frameworks and coalesce them into a single inference framework spanning from process models through to heterogeneous, sparsely observed and possibly extreme spatiotemporal data.
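As a minimal illustration of a GP used as an emulator, the sketch below conditions a zero-mean GP with a squared-exponential kernel on a handful of runs of a stand-in simulator and returns the posterior mean and variance at new inputs. The kernel, its fixed hyperparameter values and the function `toy_simulator` are assumptions for illustration only; in practice hyperparameters would be estimated from the runs.

```python
import numpy as np

def sq_exp_kernel(a, b, variance=1.0, lengthscale=0.3):
    """Squared-exponential covariance between 1-D input arrays a and b."""
    d = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (d / lengthscale) ** 2)

def gp_posterior(x_train, y_train, x_test, noise=1e-6, **kern):
    """Posterior mean and variance of a zero-mean GP conditioned on (x_train, y_train)."""
    K = sq_exp_kernel(x_train, x_train, **kern) + noise * np.eye(x_train.size)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))   # K^{-1} y
    K_s = sq_exp_kernel(x_train, x_test, **kern)
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.diag(sq_exp_kernel(x_test, x_test, **kern)) - np.sum(v**2, axis=0)
    return mean, var

def toy_simulator(x):
    """Hypothetical stand-in for an expensive process model."""
    return np.sin(2 * np.pi * x) + 0.5 * x

x_design = np.linspace(0.0, 1.0, 8)          # the simulator runs we can afford
y_design = toy_simulator(x_design)
x_new = np.array([0.15, 0.55, 0.9])          # inputs where no run was made
mean, var = gp_posterior(x_design, y_design, x_new)
```

The posterior variance is what makes the emulator useful inside a larger analysis: it quantifies how far each prediction can be trusted between simulator runs.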

Building on state-of-the-art spatiotemporal modelling, we will develop more effective models for spatial data; incorporate seasonality, anisotropy and model misspecification into the GP framework, extending existing semi-parametric bivariate methods; and adapt inference techniques to handle observation process mechanisms such as preferential and irregular sampling.
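One simple way to build anisotropy and seasonality into a GP covariance is to multiply an anisotropic spatial kernel (a separate lengthscale per coordinate) by a temporal kernel with a periodic component; a product of valid covariance functions is itself valid. The separable product form, the annual period of 365.25 days and every parameter value below are illustrative assumptions, not the project's chosen model.

```python
import numpy as np

def anisotropic_spatial(s1, s2, lengthscales=(50.0, 10.0)):
    """Squared-exponential kernel with a separate lengthscale per spatial axis (e.g. km)."""
    diff = (s1[:, None, :] - s2[None, :, :]) / np.asarray(lengthscales)
    return np.exp(-0.5 * np.sum(diff**2, axis=-1))

def seasonal_temporal(t1, t2, period=365.25, periodic_ls=1.0, decay_ls=1000.0):
    """Periodic (annual) kernel damped by a long squared-exponential decay; times in days."""
    d = t1[:, None] - t2[None, :]
    periodic = np.exp(-2.0 * np.sin(np.pi * d / period) ** 2 / periodic_ls**2)
    return periodic * np.exp(-0.5 * (d / decay_ls) ** 2)

def space_time_kernel(s1, t1, s2, t2, variance=1.0):
    """Separable space-time covariance: variance * k_space * k_time."""
    return variance * anisotropic_spatial(s1, s2) * seasonal_temporal(t1, t2)

sites = np.array([[0.0, 0.0], [20.0, 0.0], [0.0, 20.0]])
times = np.zeros(3)
K = space_time_kernel(sites, times, sites, times)
```

Here two sites 20 km apart along the first axis remain strongly correlated, while the same separation along the second axis gives a much weaker correlation; similarly, observations one year apart are more correlated than observations half a year apart.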

In emulation we will primarily exploit existing approaches. We will emulate processes described by systems of stochastic differential equations, potentially with missing data, and we will compare our data-driven spatiotemporal models with process models, using the discrepancies to improve strategies for sampling design.
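Emulating an SDE-based process model first requires simulating it. The sketch below applies the Euler-Maruyama scheme to an Ornstein-Uhlenbeck process, a hypothetical stand-in for an environmental SDE; ensembles run at a few values of the mean-reversion rate give summaries (here the mean and variance at the final time) of the kind an emulator could then be trained on. The choice of SDE, step size and design are assumptions.

```python
import numpy as np

def euler_maruyama_ou(theta, mu=0.0, sigma=0.5, x0=1.0, t_end=5.0,
                      n_steps=500, n_paths=2000, seed=0):
    """Simulate dX = theta * (mu - X) dt + sigma dW by Euler-Maruyama.

    Returns X(t_end) for each of n_paths independent paths.
    """
    rng = np.random.default_rng(seed)
    dt = t_end / n_steps
    x = np.full(n_paths, x0)
    for _ in range(n_steps):
        dw = rng.normal(0.0, np.sqrt(dt), size=n_paths)   # Brownian increments
        x = x + theta * (mu - x) * dt + sigma * dw
    return x

# Ensemble summaries over a small design in the mean-reversion rate theta
design = np.array([0.5, 1.0, 2.0])
summaries = np.array([[xs.mean(), xs.var()]
                      for xs in (euler_maruyama_ou(th) for th in design)])
```

For this particular SDE the exact mean and variance are known in closed form, which makes it a convenient check on the simulator before an emulator is built on top of it.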

Downscaling is central to relating process models to data. We will build on significant recent advances in multi-layered statistical downscaling to provide a stochastic integration engine that allows multi-scale data fusion and analysis, accounting, in a principled way, for measurement and sampling processes embedded in space-time data and process models.
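A basic ingredient of principled multi-scale fusion is a change-of-support operator: coarse observations (for example a grid-cell average from a process model) and point observations are both treated as linear functionals of the same fine-scale latent GP, so their different supports enter the likelihood explicitly. The 1-D grid, kernel, noise levels and observation values below are hypothetical, chosen only to show the structure.

```python
import numpy as np

def sq_exp(a, b, lengthscale=0.2):
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / lengthscale) ** 2)

# Fine-scale latent field on a 1-D grid of 40 cells (small jitter for stability)
grid = np.linspace(0.0, 1.0, 40)
prior_cov = sq_exp(grid, grid) + 1e-8 * np.eye(grid.size)

# Observation operator A: rows 0-1 are block averages (coarse support),
# rows 2-3 pick out single grid cells (point support)
A = np.zeros((4, grid.size))
A[0, :20] = 1.0 / 20      # coarse cell covering the left half
A[1, 20:] = 1.0 / 20      # coarse cell covering the right half
A[2, 5] = 1.0             # point observation
A[3, 30] = 1.0            # point observation
noise_sd = np.array([0.05, 0.05, 0.1, 0.1])   # assumed measurement error
y = np.array([0.3, -0.2, 0.6, -0.5])           # hypothetical observations

# Gaussian conditioning for y = A f + e: gain = P A^T S^{-1}
S = A @ prior_cov @ A.T + np.diag(noise_sd**2)
gain = np.linalg.solve(S, A @ prior_cov).T
post_mean = gain @ y
post_cov = prior_cov - gain @ A @ prior_cov
```

Because both kinds of data are expressed through the same operator, adding another data source with a different support only adds rows to `A`.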

Environmental data exhibit spatiotemporal extreme value structure. Current models for such data assume a strong form of dependence, termed asymptotic dependence, under which extreme values tend to occur simultaneously at all locations. These models are liable to give poor estimates (biased and with under-estimated variance) of the risk associated with environmental extremes. We will develop a novel framework to escape this problem, combining the strategies of max-stable processes with the conditional extremes perspective. GP model inference will be key here, with inference complicated by censoring the process below a threshold, beneath which the asymptotic arguments are not expected to hold.
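A standard diagnostic for the distinction drawn here is the tail-dependence measure χ(u) = Pr(U₂ > u | U₁ > u), computed after transforming both variables to uniform margins: under asymptotic dependence χ(u) tends to a positive limit as u → 1, while under asymptotic independence it tends to zero. The sketch estimates χ(u) empirically for a bivariate Gaussian pair, which is asymptotically independent for any correlation below one; the correlation, sample size and thresholds are arbitrary choices for illustration.

```python
import numpy as np

def empirical_chi(x1, x2, u):
    """Estimate chi(u) = P(U2 > u | U1 > u) using rank transforms to uniform margins."""
    n = x1.size
    u1 = (np.argsort(np.argsort(x1)) + 1) / (n + 1)
    u2 = (np.argsort(np.argsort(x2)) + 1) / (n + 1)
    exceed1 = u1 > u
    return np.mean(u2[exceed1] > u)

rng = np.random.default_rng(0)
rho = 0.7
z = rng.multivariate_normal([0.0, 0.0], [[1.0, rho], [rho, 1.0]], size=200_000)
chis = {u: empirical_chi(z[:, 0], z[:, 1], u) for u in (0.5, 0.9, 0.99)}
```

The estimates fall steadily as the threshold rises, even though the correlation is strong; a model that forces χ(u) to stay high at extreme thresholds would overstate the chance of simultaneous extremes for data like these.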

The previously described techniques are important in their own right, but the step change in capability for the environmental sciences will come from combining these building blocks to draw inferences from multiple disconnected information sources. We will develop a statistical framework for integrating scientific knowledge, combining appropriate deterministic, stochastic and data models, to enable full propagation of uncertainty through the different modular elements of an analysis. Initially we will develop core skills for this approach through a series of methodological case studies focused on the challenge themes.
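The simplest way to propagate uncertainty through a chain of modules is Monte Carlo: draw from the uncertainty of each upstream component, push every draw through the downstream components, and summarise the resulting ensemble. The three modules below (a parameter posterior, a deterministic process model and a stochastic data model) are hypothetical placeholders chosen only to show the structure.

```python
import numpy as np

rng = np.random.default_rng(1)
n_draws = 10_000

# Module 1: uncertainty about a process-model parameter (assumed Gaussian posterior)
k = rng.normal(0.8, 0.1, size=n_draws)

# Module 2: hypothetical deterministic process model (exponential decay at t = 2)
def process_model(k, t=2.0, c0=10.0):
    return c0 * np.exp(-k * t)

latent = process_model(k)

# Module 3: stochastic data model with multiplicative lognormal measurement error
observed = latent * rng.lognormal(mean=0.0, sigma=0.2, size=n_draws)

# Summaries carry the combined uncertainty from all three modules
lower, median, upper = np.percentile(observed, [2.5, 50.0, 97.5])
```

Each module can be swapped (for example, replacing the closed-form process model by a GP emulator) without changing the propagation logic, which is the sense in which the framework is modular.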

Machine Learning and Decision-Making

Statistical approaches in environmental science have generally been custom-built for a particular model and a constrained data type, without considering the full downstream use of the inference. We will enhance model-based statistics with modern machine learning techniques so that they scale to heterogeneous data sources and end-user requirements. Building on the GP backbone, we will develop computational techniques for large volumes of data, interface agent-based modelling frameworks with traditional process models, and explicitly include decision-making and active data selection.

We will further develop recent advances in scalable computing to address the significant challenges posed by large-scale integrated modelling. At its simplest, this will involve extending recent work on parallelising spatial operations and deploying it within the R software environment. Parallelising the fast Fourier transform on graphics processing units (GPUs) will speed up general Markov chain Monte Carlo (MCMC) inference for spatial datasets, substantially improving the ability to deploy all such methods at scale. A related improvement to MCMC schemes subsamples the data to obtain an unbiased estimate of the log-posterior gradient that drives a Langevin diffusion; we will extend this framework to encompass adaptive subsampling of heterogeneous data and automatic gradient estimation. These innovations will be deployed within a framework of parallel MCMC runs on subsets of the data, designed specifically for fitting parallel Gaussian process models.
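The subsampling idea can be illustrated with stochastic gradient Langevin dynamics (SGLD): at each step the log-posterior gradient is estimated without bias from a random minibatch (the likelihood term rescaled by N/n), and the parameter takes a half-step along that estimate plus Gaussian noise whose variance equals the step size. The toy model (Gaussian data with unknown mean and a N(0, 10²) prior), the fixed step size and the batch size are assumptions; the adaptive subsampling and automatic gradient estimation described above are not shown.

```python
import numpy as np

def sgld(x, n_iter=5000, batch_size=100, step=1e-5, prior_sd=10.0, seed=0):
    """SGLD for theta in the toy model x_i ~ N(theta, 1), theta ~ N(0, prior_sd**2).

    The model, step size and batch size are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    n_data = x.size
    theta = 0.0
    draws = np.empty(n_iter)
    for t in range(n_iter):
        # Minibatch drawn uniformly with replacement
        batch = x[rng.integers(0, n_data, size=batch_size)]
        # Unbiased estimate of d/dtheta log posterior: exact prior term plus
        # the minibatch likelihood term rescaled by n_data / batch_size
        grad = -theta / prior_sd**2 + (n_data / batch_size) * np.sum(batch - theta)
        # Langevin update: half a step along the gradient plus N(0, step) noise
        theta += 0.5 * step * grad + np.sqrt(step) * rng.standard_normal()
        draws[t] = theta
    return draws

data = np.random.default_rng(1).normal(2.0, 1.0, size=10_000)
draws = sgld(data)[1000:]   # discard burn-in
```

Each iteration touches only 100 of the 10,000 observations. With a fixed step size the minibatch noise inflates the spread of the draws relative to the exact posterior, which is one reason decreasing step sizes or correction schemes are used in practice.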

Many process models operate at large scales, setting boundary conditions for downscaling. However, there is also a need for local process models that sit between the latent framework and the data, such as an agent-based model of land-use decision-making that connects climate models to data. These models offer an opportunity both to interpret non-aggregated data types properly and to support simulation-based scenario planning. We will develop methods to calibrate these fine-scale process models to data, both in isolation and (more importantly) as components of an integrated modelling framework. Approaches to be considered will include approximate Bayesian computation, stochastic variational inference and Bayesian optimisation.
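Approximate Bayesian computation is attractive for agent-based models because it needs only the ability to simulate, not a likelihood. The sketch below uses plain rejection ABC to calibrate one parameter of a toy agent-based model of land-use change; the model itself, the uniform prior, the trajectory summary and the 1% acceptance rate are all hypothetical choices for illustration.

```python
import numpy as np

def simulate_abm(p, rng, n_agents=200, n_years=10):
    """Hypothetical toy agent-based model of land-use change.

    Each year, every agent that has not yet switched land use does so with
    probability p * (1 + share of agents already switched), capped at 1.
    Returns the share of switched agents at the end of each year.
    """
    switched = np.zeros(n_agents, dtype=bool)
    trajectory = np.empty(n_years)
    for year in range(n_years):
        prob = min(1.0, p * (1.0 + switched.mean()))
        switched |= rng.random(n_agents) < prob
        trajectory[year] = switched.mean()
    return trajectory

def abc_rejection(observed, n_draws=5000, accept_frac=0.01, seed=0):
    """Rejection ABC with an assumed Uniform(0, 0.2) prior on p."""
    rng = np.random.default_rng(seed)
    p_draws = rng.uniform(0.0, 0.2, size=n_draws)
    # Euclidean distance between simulated and observed trajectories
    dists = np.array([np.linalg.norm(simulate_abm(p, rng) - observed) for p in p_draws])
    # Keep the parameter draws whose simulations lie closest to the data
    keep = dists <= np.quantile(dists, accept_frac)
    return p_draws[keep]

observed = simulate_abm(0.05, np.random.default_rng(42))   # synthetic "data"
posterior = abc_rejection(observed)
```

The accepted draws approximate the posterior for `p`; the same loop applies unchanged when the simulator is one component of a larger integrated model, although its cost then motivates the more efficient alternatives listed above.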

All of our inferential innovations are co-designed with end users and therefore implicitly support decisions. However, statistical methods commonly stop at the point of delivering the inference engine; we will go further and develop decision-making support for both inference and science. We will deploy Bayesian optimisation (BO) to explore the parameter space of process models efficiently, trading off exploration of new regions of parameter space against refinement of estimates in promising regions. Furthermore, when building emulators from existing repositories of model output, active learning based on contextual bandits will sequentially select witness points for GPs so as to minimise computational cost. As well as using existing BO procedures, we will develop methods to choose a batch of parallel runs of a process model rather than selecting runs purely sequentially. The same decision-making technologies will also be deployed directly to support scientists’ decisions over where to gather more data; again the challenge is, given current beliefs and their uncertainties, to find a maximally informative location.
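A minimal Bayesian optimisation loop makes the exploration/refinement trade-off concrete: a GP surrogate is fitted to the runs so far, and the next run is placed where the upper confidence bound, mean + κ·sd, is largest, so κ controls how strongly uncertain regions are favoured. The toy objective (standing in for, say, a calibration score of a process model as a function of one parameter), the fixed kernel hyperparameters and κ = 2 are illustrative assumptions; batch selection for parallel runs is not shown.

```python
import numpy as np

def rbf(a, b, lengthscale=0.2):
    """Unit-variance squared-exponential kernel (fixed, assumed hyperparameters)."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / lengthscale) ** 2)

def gp_mean_sd(x, y, x_grid, jitter=1e-6):
    """Zero-mean GP posterior mean and standard deviation on x_grid."""
    L = np.linalg.cholesky(rbf(x, x) + jitter * np.eye(x.size))
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    K_s = rbf(x, x_grid)
    v = np.linalg.solve(L, K_s)
    var = np.clip(1.0 - np.sum(v**2, axis=0), 0.0, None)
    return K_s.T @ alpha, np.sqrt(var)

def calibration_score(theta):
    """Hypothetical objective to maximise, standing in for an expensive model run."""
    return -(theta - 0.3) ** 2

def bayes_opt_ucb(objective, n_iter=20, kappa=2.0):
    x_grid = np.linspace(0.0, 1.0, 201)      # candidate parameter values
    x = np.array([0.0, 0.5, 1.0])            # initial runs
    y = objective(x)
    for _ in range(n_iter):
        mean, sd = gp_mean_sd(x, y, x_grid)
        # Upper confidence bound: larger kappa favours uncertain, unexplored regions
        x_next = x_grid[np.argmax(mean + kappa * sd)]
        x = np.append(x, x_next)
        y = np.append(y, objective(x_next))
    best = np.argmax(y)
    return x[best], y[best]

best_theta, best_score = bayes_opt_ucb(calibration_score)
```

Replacing `calibration_score` with a call to a real model run leaves the loop unchanged; only the number of iterations and the acquisition rule would need tuning.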

Virtual Lab Development

This theme will focus on the development and deployment of virtual labs as a key instantiation of the integration of heterogeneous data and models that is central to our project. Virtual labs are also a key component of our Pathways to Impact. The virtual labs will be cloud-based; initial experiments will be carried out in a private cloud environment at Lancaster University, with mature software migrated to JASMIN as part of the Data Labs Initiative, to make it available to broader environmental communities.

We will develop an integrated platform for storing and processing heterogeneous data in a cloud environment. Importantly, this will incorporate a cloud broker to provide independence from underlying providers and allow computations to be executed on the most appropriate platforms. This potentially enormous task is feasible because many of the required building blocks are already available in the cloud, and we can leverage other CEH and Lancaster initiatives in pilot virtual laboratories.
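The role of the cloud broker can be sketched as a thin abstraction layer: each provider is wrapped behind a common interface, and the broker selects among them using declared capabilities and cost, so analysis code never refers to a specific provider. All class names, fields, provider names and the cheapest-capable selection rule below are hypothetical; a real broker would also handle credentials, data locality and failures.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Provider:
    """Hypothetical description of one compute provider behind the broker."""
    name: str
    has_gpu: bool
    max_memory_gb: int
    cost_per_hour: float
    run: Callable[[str], str]   # submits a job and returns a job identifier

@dataclass
class JobRequest:
    command: str
    needs_gpu: bool = False
    memory_gb: int = 4

class CloudBroker:
    """Chooses the cheapest registered provider that satisfies a job's requirements."""

    def __init__(self) -> None:
        self._providers: list[Provider] = []

    def register(self, provider: Provider) -> None:
        self._providers.append(provider)

    def select(self, job: JobRequest) -> Provider:
        capable = [
            p for p in self._providers
            if (p.has_gpu or not job.needs_gpu) and p.max_memory_gb >= job.memory_gb
        ]
        if not capable:
            raise RuntimeError(f"no provider can run {job.command!r}")
        return min(capable, key=lambda p: p.cost_per_hour)

    def submit(self, job: JobRequest) -> str:
        return self.select(job).run(job.command)

# Usage with two made-up providers
broker = CloudBroker()
broker.register(Provider("private-cloud", False, 64, 0.0, lambda cmd: f"local:{cmd}"))
broker.register(Provider("public-gpu", True, 256, 3.5, lambda cmd: f"gpu:{cmd}"))
gpu_job_id = broker.submit(JobRequest("fit_gp", needs_gpu=True))
cpu_job_id = broker.submit(JobRequest("summarise"))
```

Because jobs state requirements rather than destinations, a new provider can be registered without changing any analysis code.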

We will develop software frameworks to support model integration, as a key intellectual output and a significant advance on the state of the art in virtual labs. These frameworks will support all aspects of model integration, in particular the execution of integrated modelling experiments involving arbitrary configurations of process and data models. They will ensure interoperability across different models using a RESTful approach, but will go significantly beyond (syntactic) interoperability to ensure semantic integration, allowing end-to-end reasoning about uncertainty. As a final stage, we will look to deploy and manage complex model runs automatically from a high-level description of each lab, building on experience with model-driven engineering and domain-specific languages gained in the Models in the Cloud project.
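As an illustration of deriving a run from a high-level description, the sketch below declares a lab as a set of models with named, unit-tagged inputs and outputs, checks that every connection agrees in units as well as names before anything runs (a crude stand-in for semantic integration), and derives a valid execution order with Python's `graphlib.TopologicalSorter`. The description format and the model names are hypothetical and are not the Models in the Cloud languages.

```python
from graphlib import TopologicalSorter

# Hypothetical high-level lab description: each model lists the variables it
# consumes and produces, each tagged with a unit.
LAB = {
    "climate_downscaler": {"inputs": {}, "outputs": {"precipitation": "mm/day"}},
    "hydrology_model": {
        "inputs": {"precipitation": "mm/day"},
        "outputs": {"river_flow": "m3/s"},
    },
    "flood_risk_model": {
        "inputs": {"river_flow": "m3/s"},
        "outputs": {"flood_probability": "1"},
    },
}

def plan(lab):
    """Return an execution order, rejecting missing inputs and unit mismatches."""
    producers = {}
    for model, spec in lab.items():
        for var, unit in spec["outputs"].items():
            producers[var] = (model, unit)
    graph = {}   # model -> set of models it depends on
    for model, spec in lab.items():
        deps = set()
        for var, unit in spec["inputs"].items():
            if var not in producers:
                raise ValueError(f"{model}: no model produces {var!r}")
            source, source_unit = producers[var]
            if source_unit != unit:
                raise ValueError(
                    f"{model} expects {var!r} in {unit}, but {source} provides {source_unit}"
                )
            deps.add(source)
        graph[model] = deps
    return list(TopologicalSorter(graph).static_order())

order = plan(LAB)
```

Rejecting the configuration before execution is the point of the exercise: a syntactically valid connection (matching variable names) can still be semantically wrong (mismatched units).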

We will provide tools to support decision-making, targeting a range of audiences. These will include the ability to interrogate and query the underlying data, the live execution of model runs on the cloud, and the subsequent visualisation of data or model output. We plan to go significantly beyond this by supporting scenarios as first-class (and persistent) entities in the virtual lab, which can be developed, stored and explored, allowing ‘what if’ investigations. Finally, we plan to support Jupyter notebooks, which allow users to create and share documents containing live code, and to explore the role of such technologies in documenting science and decision-making, thus providing specific mechanisms for open science.
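Treating scenarios as first-class, persistent entities could be as simple as giving each scenario a name, a parent and a set of parameter values that can be saved and reloaded, so that a ‘what if’ variant is derived from an existing scenario rather than edited in place. The `Scenario` class, its fields and the JSON storage format below are hypothetical illustrations.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import Optional

@dataclass(frozen=True)
class Scenario:
    """Hypothetical persistent scenario; frozen, so fields cannot be reassigned."""
    name: str
    parameters: dict = field(default_factory=dict)
    parent: Optional[str] = None

    def derive(self, name: str, **overrides) -> "Scenario":
        """Create a 'what if' variant that records this scenario as its parent."""
        return Scenario(name=name, parameters={**self.parameters, **overrides},
                        parent=self.name)

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @staticmethod
    def from_json(text: str) -> "Scenario":
        return Scenario(**json.loads(text))

baseline = Scenario("baseline", {"warming_c": 1.5, "forest_cover": 0.12})
what_if = baseline.derive("more_forest", forest_cover=0.20)
restored = Scenario.from_json(what_if.to_json())
```

Recording the parent keeps the provenance of every variant, so a chain of ‘what if’ questions can be replayed or shared alongside a notebook.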