AI4EO: a new frontier for gaining trust in AI

Towards explainable AI for Earth Observation

The project Towards Explainable AI for Earth Observation is part-funded by the Phi Lab of the European Space Agency (ESA) under its AI4EO initiative, which aims to bridge Earth Observation (EO) and AI.

The primary aim of this project is to study and develop explainable, interpretable-by-design deep learning methods for flood detection.

AI techniques are now widely used for Earth Observation (EO). Among these, deep learning (DL) methods are particularly noteworthy because they achieve state-of-the-art results. However, these models are often described as “black boxes”: there is no clear causal link between their inputs and outputs, and their billions of trainable parameters have no direct connection to the physical nature of the inputs. This hampers the interpretability of the decision-making process for human users. Another problem with current state-of-the-art deep learning is its hunger for large amounts of labelled input data and compute resources, with the associated energy and time costs.

The aim of this project is to develop new methods that retain the high accuracy of deep learning while offering human-interpretable models and decision-making and requiring significantly fewer computational resources, and ultimately to apply them to multispectral data such as Sentinel-2 imagery.

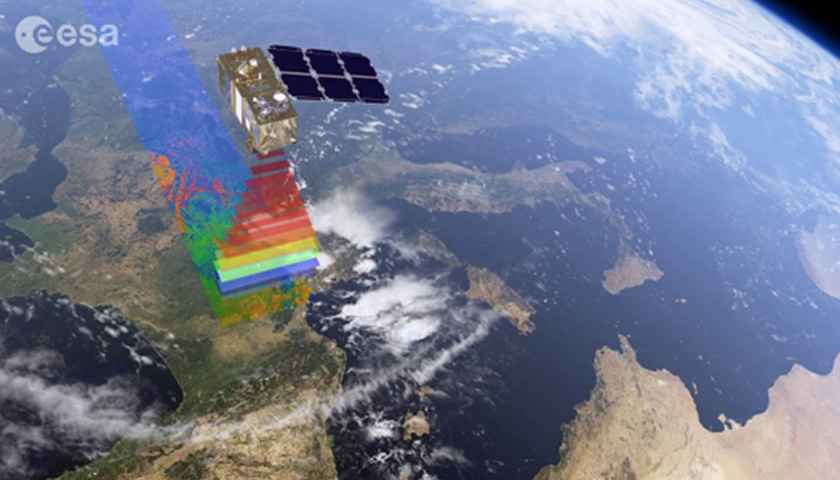

The figure shows how the Sentinel-2 satellite operates. It carries a wide-swath, high-resolution multispectral imager that records 13 spectral bands.

In summary, our aim is to develop and use interpretable-by-design deep learning techniques, specifically tailored towards flood detection and flood prediction.

Flood detection requires semantic segmentation, which assigns a class label to each multidimensional pixel. Several semantic segmentation models were therefore used and studied. For example:

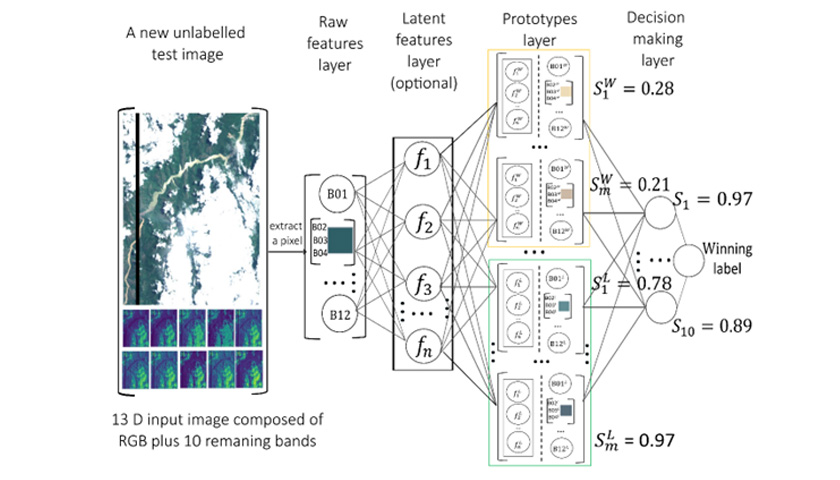

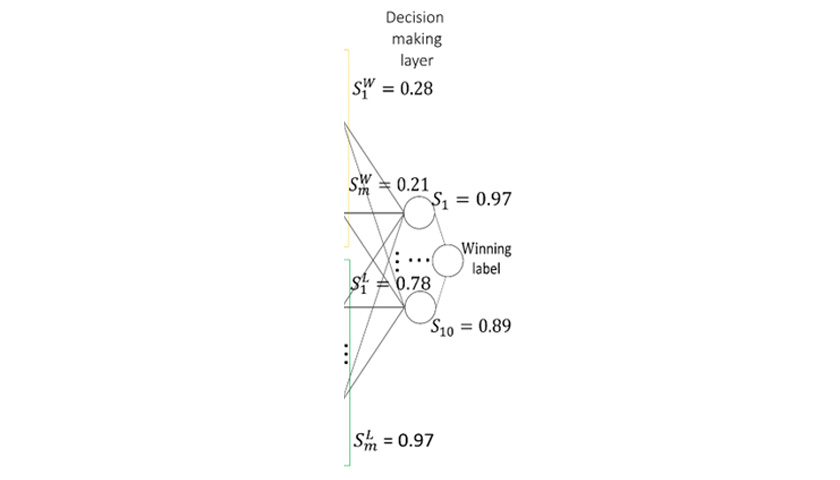

The validation architecture of the Interpretable Deep Semantic Segmentation (IDSS) method illustrates its decision-making process.

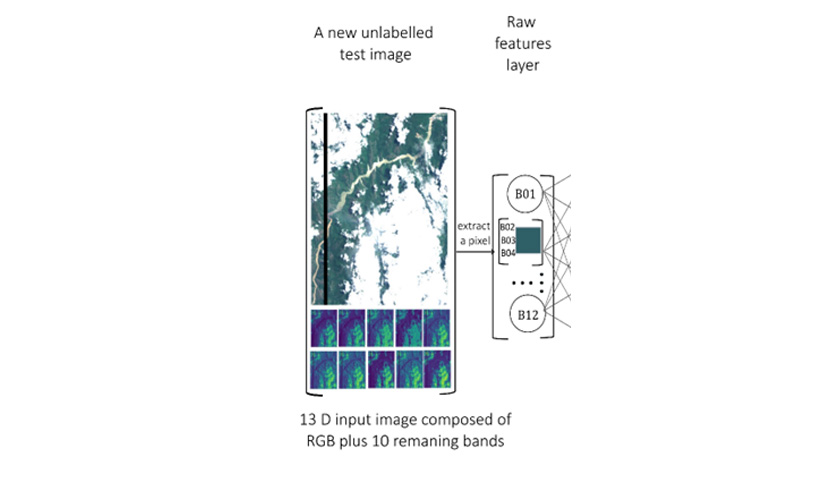

Each Sentinel-2 multispectral image has 13 bands: in addition to the three RGB bands that the human eye can see, it contains 10 other bands, which can also be visualized. When a test image comes in, the raw features layer extracts the 13 band values of each pixel.
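The raw features step can be sketched as a simple reshaping of the image array. This is a minimal illustration, not the project's code: it assumes the image is held as a NumPy array in band-first `(bands, height, width)` layout, and the function name `raw_pixel_features` and the toy image are hypothetical.

```python
import numpy as np


def raw_pixel_features(image: np.ndarray) -> np.ndarray:
    """Turn a (bands, height, width) image into one row of band values per pixel."""
    if image.ndim != 3:
        raise ValueError("expected an array shaped (bands, height, width)")
    bands, height, width = image.shape
    # Move the band axis last so that each row holds one pixel's spectrum,
    # then flatten the two spatial axes in row-major order.
    return np.moveaxis(image, 0, -1).reshape(height * width, bands)


# Toy stand-in for a 13-band image tile of 2 x 3 pixels (assumed layout).
image = np.arange(13 * 2 * 3, dtype=np.float32).reshape(13, 2, 3)
features = raw_pixel_features(image)
print(features.shape)  # (6, 13)
```

Row `i` of `features` corresponds to the pixel at row `i // width`, column `i % width`, so predictions made per row can be reshaped back into an image.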

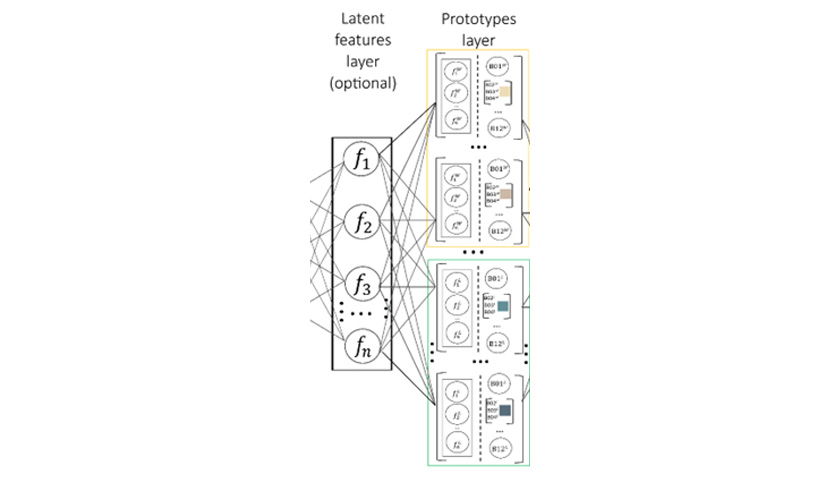

Next, the latent feature layer extracts the corresponding 64 features for the same pixel from the U-Net neural network. These 64 latent features and the 13 raw features are then compared with the prototypes, which are the most representative pixels selected from the training data. In this case there are three classes: land, water, and cloud.

For each class, 500 prototypes are selected from the training data. The similarity score between the pixel and each prototype is calculated in this step.

Finally, the similarity scores are compared in the decision-making layer, and the winning (predicted) label is obtained with the k-nearest neighbours method (K = 10 in this case).
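The prototype comparison and K = 10 vote described above can be sketched as follows. The text does not specify the similarity measure or how the 13 raw and 64 latent features are combined, so this sketch assumes they are concatenated into one 77-dimensional vector and compared by Euclidean distance. The prototypes here are synthetic random data, and `knn_prototype_label` is a hypothetical name.

```python
import numpy as np

CLASS_NAMES = ("land", "water", "cloud")  # the three classes named in the text


def knn_prototype_label(pixel, prototypes, prototype_labels, k=10):
    """Classify one pixel by majority vote among its k nearest prototypes.

    Assumption: similarity is measured by Euclidean distance
    (smaller distance = more similar).
    """
    distances = np.linalg.norm(prototypes - pixel, axis=1)
    nearest = np.argsort(distances)[:k]  # indices of the k closest prototypes
    votes = np.bincount(prototype_labels[nearest], minlength=len(CLASS_NAMES))
    return int(np.argmax(votes)), nearest


# Synthetic stand-ins for real prototypes: 500 per class,
# each with 13 raw + 64 latent features.
rng = np.random.default_rng(0)
n_per_class, n_features = 500, 13 + 64
class_centres = np.array([0.0, 5.0, 10.0])
prototypes = np.concatenate(
    [rng.normal(c, 1.0, size=(n_per_class, n_features)) for c in class_centres]
)
prototype_labels = np.repeat(np.arange(3), n_per_class)

pixel = np.full(n_features, 5.0)  # a pixel sitting at the synthetic "water" centre
label, nearest = knn_prototype_label(pixel, prototypes, prototype_labels)
print(CLASS_NAMES[label])  # water
```

Returning the indices of the nearest prototypes alongside the label is what makes a decision of this kind inspectable: each prediction can be traced back to specific training pixels.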

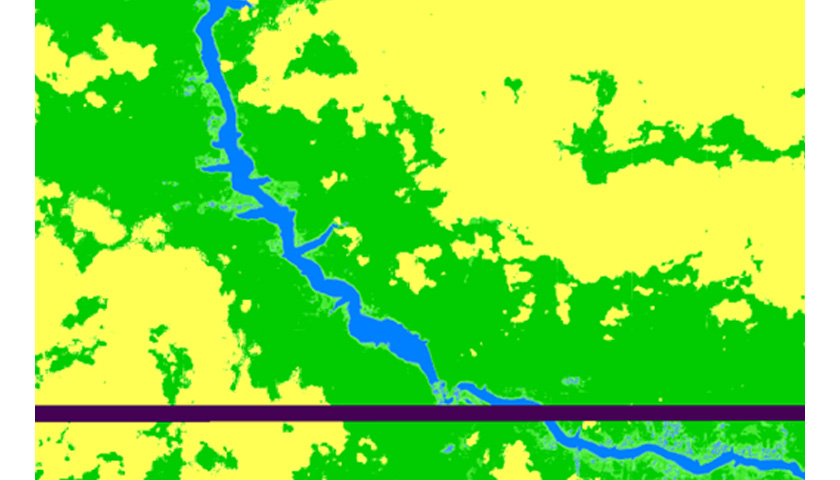

Visualization of the prediction confidence as a heat map: a lighter colour means that the pixel has a lower confidence score, while a darker colour means a higher confidence score.

| Model | IoU, total water (%) | Recall, total water (%) | Parameters |
|---|---|---|---|
| IDSS 1 (ours) | 73.10 | 93.35 | 96 |
| IDSS 2 (ours) | 70.03 | 96.11 | 96 |
| xDNN | 71.50 | 90.27 | 20 |
| U-Net | 72.42 | 95.42 | 7790 |
| SCNN | 71.12 | 94.09 | 260 |
| NDWI1 | 64.87 | 95.55 | - |
| Linear | 64.87 | 95.55 | - |
| NDWI2 | 39.33 | 44.84 | - |
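For reference, the two metrics in the table can be computed from a predicted and a reference label map as sketched below, using the standard definitions IoU = TP / (TP + FP + FN) and recall = TP / (TP + FN) for the water class. The class index `water_class=1` and the tiny label maps are assumptions for illustration; how the reported "total water" figures were aggregated across images is not stated here.

```python
import numpy as np


def water_iou_and_recall(pred, truth, water_class=1):
    """Return (IoU %, recall %) for the water class of two label maps."""
    pred_water = pred == water_class
    true_water = truth == water_class
    tp = np.count_nonzero(pred_water & true_water)   # water predicted as water
    fp = np.count_nonzero(pred_water & ~true_water)  # non-water predicted as water
    fn = np.count_nonzero(~pred_water & true_water)  # water that was missed
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else float("nan")
    recall = tp / (tp + fn) if (tp + fn) else float("nan")
    return 100.0 * iou, 100.0 * recall


# Toy label maps with assumed encoding 0 = land, 1 = water, 2 = cloud.
truth = np.array([[0, 1, 1],
                  [0, 1, 2]])
pred = np.array([[0, 1, 0],
                 [1, 1, 2]])
iou, recall = water_iou_and_recall(pred, truth)
print(f"IoU = {iou:.2f}%, recall = {recall:.2f}%")  # IoU = 50.00%, recall = 66.67%
```

A model can score high recall with a modest IoU if it over-predicts water, which is why the table reports both.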

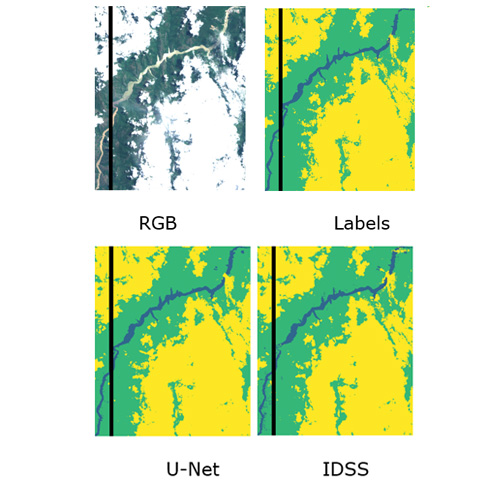

This figure compares the RGB satellite image, the labels, the U-Net prediction, and the IDSS prediction. In the labels, U-Net, and IDSS panels, green represents land, yellow represents clouds, and blue represents water.

Zhang, Z., Angelov, P., Soares, E., Longepe, N., & Mathieu, P. P. (2022, October). An Interpretable Deep Semantic Segmentation Method for Earth Observation. In 2022 IEEE 11th International Conference on Intelligent Systems (IS) (pp. 1-8). IEEE.