About the project

The project Towards Explainable AI for Earth Observation is part-funded by the Phi Lab of the European Space Agency (ESA) as part of its AI4EO initiative/challenge, which aims to bridge Earth Observation (EO) and AI.

Project Aims

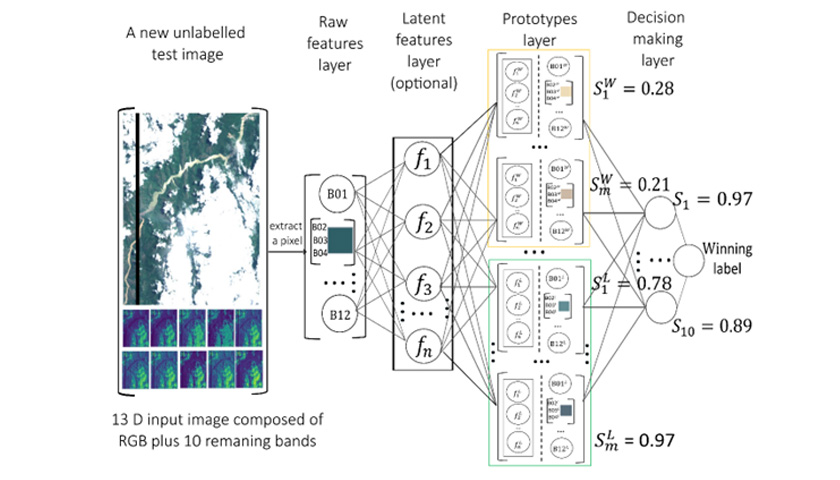

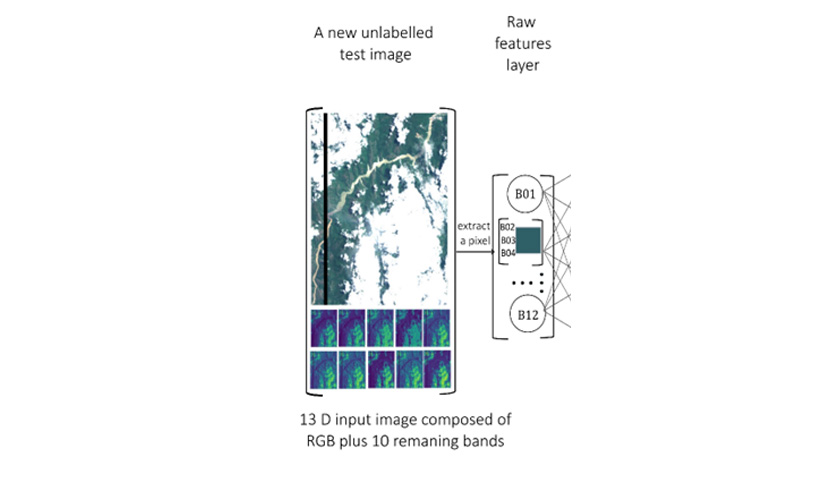

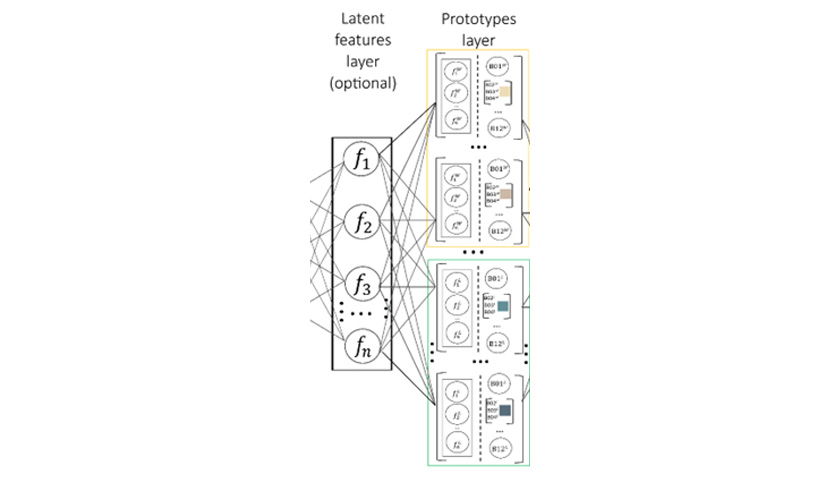

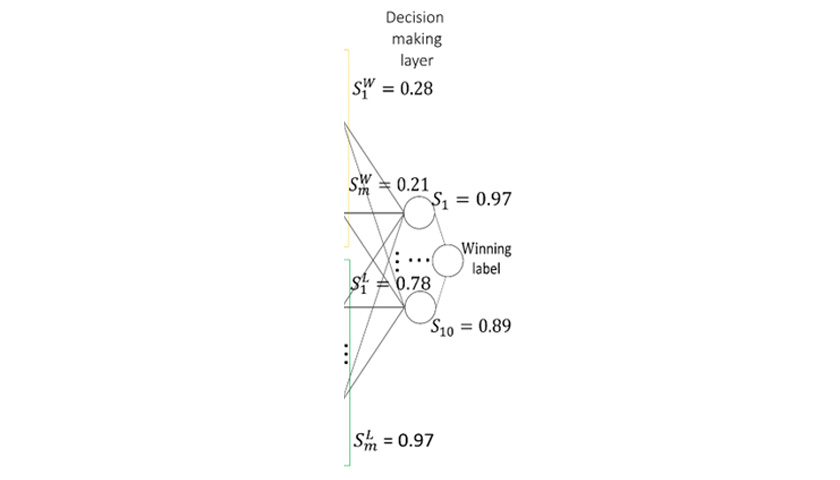

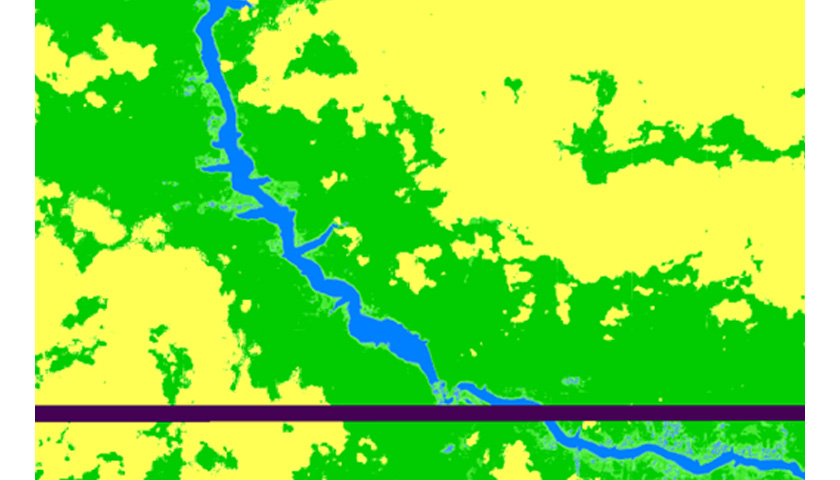

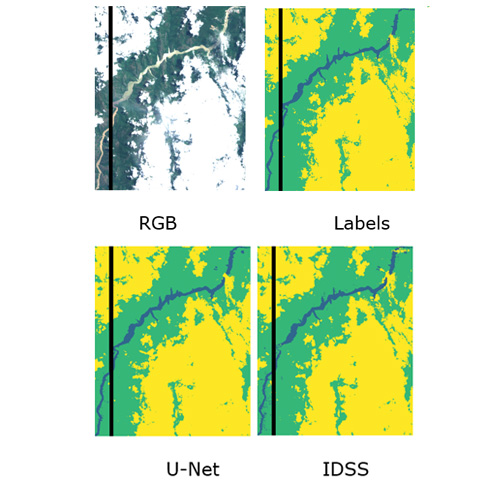

The primary aim of this project is to study and develop new explainable and interpretable-by-design deep learning methods for flood detection.

AI techniques are now widely used for Earth Observation (EO). Among these, deep learning (DL) methods are particularly noteworthy because they achieve state-of-the-art results. However, these models are often characterized as “black boxes”: there is no clear causal link between the inputs and the outputs, and their billions of trainable parameters have no direct link to the physical nature of the inputs, which hampers the interpretability of the decision-making process for human users. Another problem with current state-of-the-art deep learning is its hunger for large amounts of labelled input data and compute resources, with the associated energy and time costs.